How to (and not to) log transform zero

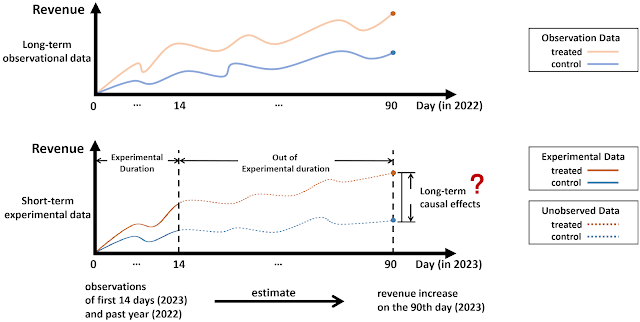

| Image courtesy of the authors: Survey results of the papers with log zero in the American Economic Review |


Academic's take

Log transformation is widely used in linear models for several reasons: making data "behave" or conform to parametric assumptions, calculating elasticities, and so on. The figure above shows that nearly 40% of the empirical papers in a selected journal used a log specification and 36% had the problem of the log of zero.

When an outcome variable naturally has zeros, however, log transformation is tricky. In most cases, the instinctive solution is to add a positive constant to each value of the outcome variable. One popular idea is to add 1 so that raw zeros remain zeros after the log transformation. Another is to add a very small constant, especially if the scale of the variable is small.

Well, the bad news is that these are all arbitrary choices that bias the resulting estimates. If a model is correlational, a small bias due to the transformation may not be a big concern. If it is causal though, and, for example, an estimated elasticity is used to change prices (with the intention of impacting an outcome such as margin or sales), such arbitrary choices can cause more serious problems (since not only the estimates but also standard errors can be arbitrarily biased). This is really a problem of data centricity, or staying true to the data at hand.
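To make the sensitivity concrete, here is a small simulation sketch. Everything in it is an assumption made for illustration: the true elasticity of 2, the scale `K`, the price range, and the Poisson demand process are invented, not taken from the paper. It regresses `log(sales + c)` on `log(price)` for three choices of the constant `c` and stores the estimated slopes.

```python
import math
import random

random.seed(0)

def poisson(lam):
    """Draw one Poisson(lam) variate with Knuth's method (fine for small lam)."""
    threshold = math.exp(-lam)
    k, prod = 0, random.random()
    while prod > threshold:
        k += 1
        prod *= random.random()
    return k

def ols_slope(x, y):
    """Slope of the least-squares line of y on x (intercept included)."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx

# Hypothetical data-generating process: expected sales = K * price^(-elasticity)
TRUE_ELASTICITY = 2.0
K = 5.0
n = 5000
prices = [random.uniform(1.0, 3.0) for _ in range(n)]
sales = [poisson(K * p ** -TRUE_ELASTICITY) for p in prices]
log_price = [math.log(p) for p in prices]

share_zero = sum(s == 0 for s in sales) / n
print(f"share of zero-sales observations: {share_zero:.2f}")

# Same data, same model, three different "fixes" for log(0)
slopes = {}
for c in (1.0, 0.1, 0.001):
    log_sales = [math.log(s + c) for s in sales]
    slopes[c] = ols_slope(log_price, log_sales)
    print(f"c = {c:<6} slope on log(price) = {slopes[c]:.2f}")
```

The data never change between runs, only the constant does, yet the slope (the would-be elasticity, up to sign) moves substantially with `c`. The smaller the constant, the further the zeros are pushed down on the log scale and the steeper the fitted line becomes.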

What is a better solution than abandoning the log-linear model for Poisson regression and similar alternatives? Recent work by Christophe Bellégo, David Benatia, and Louis Pape (2022) offers a solution called iOLS (iterated OLS). The iOLS algorithm adds an observation-specific value to the variable, which is scaled by a hyperparameter. Clever. This approach offers a family of estimators that require only OLS and covers the log-linear and Poisson models as special cases. The authors also extend the method to endogenous regressors (i.e., iterated 2SLS or i2SLS) and discuss fixed effects models, with an example of two-way fixed effects, in the paper.
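One way to see where an observation-specific value can come from, sketched here under the assumption of a multiplicative model $Y_i = \exp(X_i'\beta)\,U_i$ with an error $U_i \geq 0$ that may equal zero. The notation, including $\delta$ for the hyperparameter, is chosen for illustration and may not match the paper's exactly:

$$\log\big(Y_i + \delta \exp(X_i'\beta)\big) = X_i'\beta + \log(\delta + U_i), \quad \delta > 0$$

The left-hand side is defined even when $Y_i = 0$, and the added term $\delta \exp(X_i'\beta)$ differs across observations. Because that term depends on the unknown $\beta$, it is natural for estimation to proceed iteratively, re-running OLS with updated values. The paper gives the exact estimator and the conditions under which it works.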

The method is currently implemented in both Stata and R, but not yet in Python.

Director's cut

One of the most common and widely used demand functions is the "constant elasticity" demand function. One of its major advantages is its mathematical simplicity, which makes it easy to compute, predict, interpret, and communicate. However, it also has drawbacks. Its assumption of constant elasticity is often unrealistic because customers' response to price changes depends on the existing price. If the price is already lower than the competitor's, lowering it further may not increase demand as much as if the price were in line with the competitor's. Thus, while it is useful for modeling purposes, its practical application requires careful consideration of the underlying assumptions.

With that said, let's take a look at the constant elasticity demand function:

$$d(p) = k*p^{-\epsilon}$$

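where $d(p)$ is demand or unit sales, $p$ is price, $k$ is a scale constant, and $\epsilon$ is the price elasticity. Taking the log of both sides gives:

$$\begin{aligned} \log(d(p)) &= \log(k p^{-\epsilon}) \\ &= \log(k) + \log(p^{-\epsilon}) \\ &= \log(k) - \epsilon \log(p) \end{aligned}$$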

The final equation, written in linear regression terms as $\log(sales) = \alpha + \beta \log(price)$ with $\alpha = \log(k)$ and $\beta = -\epsilon$, is then used to estimate the percentage change in demand for each one percent change in price, which is the price elasticity of demand.
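As a quick check of this mapping, the sketch below generates noise-free demand from the constant elasticity function and fits the log-log line by least squares. The values of `K` and `EPSILON` and the price grid are hypothetical, chosen only for illustration. With strictly positive demand, the regression recovers $\log(k)$ as the intercept and $-\epsilon$ as the slope.

```python
import math

def fit_line(x, y):
    """Return (intercept, slope) of the least-squares line y = a + b * x."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    return my - slope * mx, slope

# Hypothetical parameters of d(p) = K * p^(-EPSILON)
K, EPSILON = 100.0, 1.5
prices = [1.0, 1.5, 2.0, 2.5, 3.0, 4.0]
demand = [K * p ** -EPSILON for p in prices]

# log(sales) = alpha + beta * log(price)
alpha, beta = fit_line([math.log(p) for p in prices],
                       [math.log(d) for d in demand])

print(f"alpha = {alpha:.4f}  (log(K) = {math.log(K):.4f})")
print(f"beta  = {beta:.4f}  (so the estimated elasticity is {-beta:.4f})")
```

This only works because every demand value here is positive. A single day with zero sales would make `math.log` raise a `ValueError`, which is where the log of zero problem starts.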

In estimating the elasticity using this specification, the log of zero is almost always a problem. That's because, in a day-level analysis at the SKU level for example, there are always days with no sales. It is common practice to solve the log of zero problem by adding a constant to the daily sales. This approach is preferred for its simplicity and convenience but comes at the expense of biasing the results. The iOLS solution offered above appears to address the problem more systematically. Adding observation-specific values to the variable is a much better solution than using a one-constant-fixes-all approach.

Implications for data centricity

References

- Bellégo, C., Benatia, D., & Pape, L. (2022). Dealing with logs and zeros in regression models. arXiv preprint arXiv:2203.11820.